Using ontologies

Posted on 2022-03-23 by Anabasis


Introduction

It is hard today to imagine a company without an information system (IS). Management data, accounts, customers, products, stock: often several IS even have to communicate with each other.

However, these different IS, although dealing with common concepts, represent them in very different ways. This problem is exacerbated when different companies need to communicate with each other about the same objects, say train tickets.

You and I have a vague idea of what a train ticket is.

A ticket is linked to a journey, comprising a departure station, an arrival station (or even several journeys that are compatible with each other if there are connections, etc.).

It also has a validity date, validity time slots or, when there is a reservation, a single train with its number, its schedule (the planned one ;-), and perhaps a seat number, perhaps not.

The ticket is also attached to a single person, to a subscription, or to a category of person (discounts).

And I'm not even talking about related topics (billing, exchange conditions, etc.)

So, if a travel agency tries to deal with several railway companies (ticket sellers) from different countries, each with its own model adapted to its own context, we can expect the IS to have a hard time talking to each other.

However, we also understand that these different actors are talking about similar things, and that a sufficiently generic model should facilitate their exchanges. How could such a generic model do the trick? How can we be sure that it is built together with the experts, and that they understand and validate it? This is precisely Anabasis' core business: designing and developing knowledge representation models that allow IS to be interconnected and data to be processed in complex, semantic ways.

Link with trades

The case of train tickets discussed above seems familiar to everyone, but the same is not true for very specific issues concerning a manufacturing process with its complex machines and multiple parameters.

As a general rule, knowledge of the trade is held… by the trade. With its vocabulary, its practices, its logic, its regulations, its constraints and its possible interactions with other services or professions on which it depends or which it influences.

Business expertise, whether it belongs to technicians, engineers or managers, exists in many forms: in technical documentation, scattered across the memories of several experts, or even in an obscure macro buried in a spreadsheet, written by a former colleague, that will soon stop working with the new version of the office suite.

Unless the company deploys considerable resources, in proportion to its complexity, to pass this expertise on, it is lost. No one knows how to use the spreadsheet macro anymore. Knowledge that is often implicit and empirically acquired is lost instead of being refined.

Various projects require this knowledge to be collected. For example, creating new business software to automate or formalize processes involves consulting experts. However, software developers are not business experts. To reduce unpleasant surprises at the end of the project, short cycles are favored, in particular by Agile methods. A shortcoming of this approach is that it demands a lot of the experts' time, because specifying how software should work is generally not one of their skills.

Modeling business knowledge makes it possible to talk with experts in a language they are familiar with, and thus to communicate more effectively. The experts then only need to validate or amend the functional specifications. Formalizing these is no longer their responsibility; it is carried out by knowledge engineers.

Knowledge engineering

The formalization of the business need by the knowledge engineers starts with a static model of the business universe. This model, which is high-level and therefore understandable by everyone, has the great advantage of being directly usable by a computer.

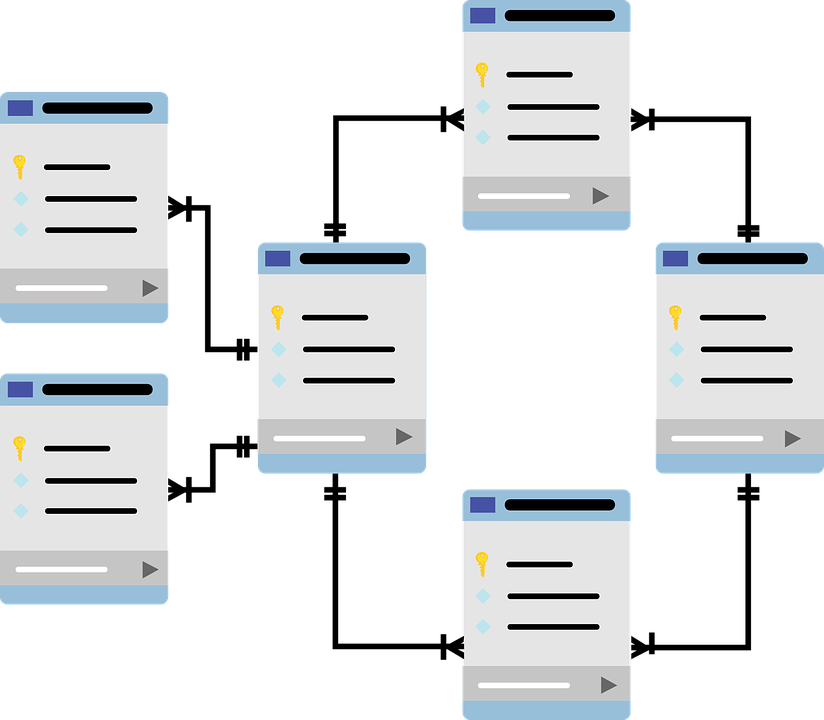

The modeling is thus based on a global diagram of the business universe: the key elements handled by the experts are called concepts, which are detailed by properties. These concepts are interconnected by relationships. For example, the concept train ticket could be linked to a concept train company by a relation is-emitted-by. This schema is expressed in a structured knowledge representation language that follows the standards of the semantic web, in our case OWL (https://en.wikipedia.org/wiki/Web_Ontology_Language).
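As a rough illustration of the schema described above, here is a minimal Python sketch that records concepts, properties and relations, then flattens them into subject/predicate/object triples using standard OWL and RDFS vocabulary terms (`owl:Class`, `owl:ObjectProperty`, `owl:DatatypeProperty`, `rdfs:domain`, `rdfs:range`). The `Concept` and `Relation` classes, the `schema_triples` helper and the property names (`validityDate`, `seatNumber`, `companyName`) are assumptions made for this example; they are not the authors' actual model or tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Concept:
    """A key element of the business universe, detailed by properties."""
    name: str
    properties: tuple = ()


@dataclass(frozen=True)
class Relation:
    """A named link from a source concept to a target concept."""
    name: str
    source: Concept
    target: Concept


def schema_triples(concepts, relations):
    """Flatten the schema into (subject, predicate, object) triples."""
    triples = []
    for concept in concepts:
        triples.append((concept.name, "rdf:type", "owl:Class"))
        for prop in concept.properties:
            triples.append((prop, "rdf:type", "owl:DatatypeProperty"))
            triples.append((prop, "rdfs:domain", concept.name))
    for relation in relations:
        triples.append((relation.name, "rdf:type", "owl:ObjectProperty"))
        triples.append((relation.name, "rdfs:domain", relation.source.name))
        triples.append((relation.name, "rdfs:range", relation.target.name))
    return triples


# Hypothetical property names, chosen only for illustration.
train_company = Concept("TrainCompany", ("companyName",))
train_ticket = Concept("TrainTicket", ("validityDate", "seatNumber"))
is_emitted_by = Relation("is-emitted-by", train_ticket, train_company)

SCHEMA = schema_triples([train_ticket, train_company], [is_emitted_by])

for triple in SCHEMA:
    print(triple)
```

In a real project these triples would normally be written in an OWL serialization and edited with dedicated tools rather than built by hand; the sketch only shows how little structure is needed to express "concept, property, relation".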

Modeling can be pushed a step further and become dynamic by writing first-order logic rules that manipulate the elements of the modeled business universe, of the form:

If such concept has such property or relation then we can deduce such new relation or property.

If the ticket's train leaves after 10 p.m. and arrives the next day, then it is a night train.

If the ticket price is not linked to a subscription, then an exchange the day before departure costs €50.
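The two example rules above can be sketched in plain Python. This only illustrates the "if condition, then deduction" shape; it is not the authors' rule language, and the `Ticket` class, its field names and the convention that `subscription=None` means "no subscription" are assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta
from typing import Optional


@dataclass
class Ticket:
    departure: datetime
    arrival: datetime
    subscription: Optional[str] = None  # assumption: None means "no subscription"


def is_night_train(ticket: Ticket) -> bool:
    """Rule 1: the train leaves after 10 p.m. and arrives the next day."""
    leaves_late = ticket.departure.time() > time(22, 0)
    next_day = ticket.departure.date() + timedelta(days=1)
    return leaves_late and ticket.arrival.date() == next_day


def exchange_fee_day_before(ticket: Ticket) -> Optional[int]:
    """Rule 2: without a subscription, an exchange the day before costs 50 euros.

    Returns None when the rule does not apply, since the text states no fee
    for tickets linked to a subscription.
    """
    return 50 if ticket.subscription is None else None


night = Ticket(datetime(2022, 3, 23, 22, 30), datetime(2022, 3, 24, 6, 10))
day = Ticket(
    datetime(2022, 3, 23, 9, 0),
    datetime(2022, 3, 23, 12, 0),
    subscription="annual-pass",  # hypothetical subscription identifier
)

print(is_night_train(night), exchange_fee_day_before(night))
print(is_night_train(day), exchange_fee_day_before(day))
```

Writing the rules as small, separately named functions keeps each one readable and checkable by a business expert on its own, which is the point of expressing them declaratively.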

We have developed our own language at Anabasis, Kalamar, which lets us declare both concepts and rules.

Executing these rules requires two things: instantiating the concepts and relationships with data from the business, and a reasoning engine that resolves all the "constraints" specified by the rules. The rules construct new information and thus enrich the knowledge base. In the end, the base consists of the instantiated model plus the deductions obtained by executing the rules. You can then query this knowledge base directly, or even build an interface that displays selected results to users.
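To make the instantiate, deduce, query cycle more concrete, here is a minimal sketch of a naive forward-chaining loop over a set of triples. It is a deliberately simplified stand-in for a reasoning engine, not a description of the authors' engine or of Kalamar: the predicate names (`departs_after_22h`, `arrives_next_day`, `has_subscription`, `is_a`, `exchange_fee_eur`), the ticket identifiers and the helper functions are all assumptions made for the example.

```python
def night_train_rule(kb):
    """Leaves after 10 p.m. and arrives the next day: deduce a night train."""
    late = {s for (s, p, o) in kb if p == "departs_after_22h" and o is True}
    next_day = {s for (s, p, o) in kb if p == "arrives_next_day" and o is True}
    return {(s, "is_a", "NightTrainTicket") for s in late & next_day}


def exchange_fee_rule(kb):
    """No subscription: deduce a 50 euro exchange fee the day before departure."""
    no_sub = {s for (s, p, o) in kb if p == "has_subscription" and o is False}
    return {(s, "exchange_fee_eur", 50) for s in no_sub}


def forward_chain(facts, rules):
    """Apply every rule repeatedly until no new triple is deduced."""
    kb = set(facts)
    while True:
        derived = set()
        for rule in rules:
            derived |= rule(kb) - kb
        if not derived:
            return kb
        kb |= derived


def query(kb, predicate):
    """Return the sorted (subject, object) pairs for one predicate."""
    return sorted((s, o) for (s, p, o) in kb if p == predicate)


# Instantiation: business data expressed as triples (hypothetical tickets).
FACTS = {
    ("ticket42", "departs_after_22h", True),
    ("ticket42", "arrives_next_day", True),
    ("ticket42", "has_subscription", False),
    ("ticket7", "departs_after_22h", False),
    ("ticket7", "arrives_next_day", False),
    ("ticket7", "has_subscription", True),
}

KB = forward_chain(FACTS, [night_train_rule, exchange_fee_rule])

print(query(KB, "is_a"))
print(query(KB, "exchange_fee_eur"))
```

The resulting base contains the original facts plus the deductions, which mirrors the description above. A production engine would index facts and handle far richer rules; the loop here only shows the principle of enriching the base until nothing new can be derived.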

The essential point is to ensure that the calculated information returned is relevant in the sense that it correctly and completely addresses the questions arising from the needs of the business experts.

For this, the work of collecting expectations is crucial, whether they are those of the experts or those of the client. The experts and the clients may not be the same people, and they do not always share the same view of the problem to be solved. The customer need must therefore be captured in its business environment, as a whole, with the help of documentation and experts.

This work takes place in a context where there is a divide between the world of IT teams, especially external ones, and the world of business and customer teams. There is no common way to link these two worlds, and the mismatch between what one expects and what the other delivers is one of the major problems of IT departments today.

The ambition of knowledge engineering is therefore to formalize the business need correctly in order to bridge this divide. This is a delicate task, since the experts themselves do not always know how to express their expectations. It is of course difficult for a computer to process something badly expressed, and very easy for it to process something formalized... but how can you guarantee that the proposed formalization is correct?

The knowledge engineering approach makes this possible in several ways.

First of all, continuously questioning the business people in their own vocabulary makes it possible to collect elements regularly; these are then analyzed, sorted and refined over time. To do this, the questions must provoke reactions from the experts, encouraging them to speak about points that are not obvious at first sight, so that informal elements can be detected. Looking a bit foolish, with questions designed to elicit a "no, no, here is what you should do instead", is a good way to get what you need ;)

But asking relevant, well-aimed questions, painting an overall picture of the business context and not wasting the experts' time all require first getting to grips with the technical or legal documentation of the business. Understanding the business need and the basics of the context takes an incompressible amount of time studying the field and, let's face it, produces very rough and sprawling first drafts of the model. An in-depth reading of Marie Kondo's work is therefore strongly recommended.

Together, these two aspects make it possible to present the model (concepts and executed rules) continuously, and to establish a virtuous loop of presentation, questioning, appropriation and construction.

This process allows the continuous creation of a dynamic model, called ontological, which grows and changes little by little throughout the project until it stabilizes at the end. Of course, HMI work to render this ontology must accompany the knowledge engineering approach, so that the client can visualize the results and, ultimately, use a product that meets their needs.

These processes may seem cumbersome, but they shouldn't be. The knowledge engineer operates with discretion, far from the classic collection workshops usually used by other professions. The approach aims to be non-intrusive and optimize the time spent by customers and experts. Any interaction has a precise objective, collection or validation by concrete results, obtained by execution of the modeling, in a very limited time. It's agility, taken to the extreme, that once again takes precedence.

Conclusion

In short, knowledge engineering is a coherent set of tools and methods, applied in constant contact with the client and its business experts and respecting their needs, constraints and limited time, with the aim of faithfully capturing and correctly addressing the client's needs.

To go further:

- OWL: a language for describing ontologies.

- Protégé: a graphical OWL editor for designing ontologies.

- SWRL: a language for writing rules that operate on an ontology.

Sabrina and Fabien,

Knowledge engineers
